
Important Topic of Chemistry: Entropy

Entropy is both a scientific concept and a measurable physical property that is most commonly associated with disorder, randomness, or uncertainty. The term and concept are used in a variety of fields, ranging from classical thermodynamics, where it was first recognized, to statistical physics’ microscopic description of nature, and to the principles of information theory. It has a wide range of applications in chemistry and physics, biological systems and their relationship to life, cosmology, economics, sociology, weather science, climate change, and information systems, including information transmission in telecommunication.

In 1850, the Scottish scientist and engineer Macquorn Rankine used the terms thermodynamic function and heat-potential to describe the thermodynamic concept. One of the leading founders of thermodynamics, the German physicist Rudolf Clausius, defined it in 1865 as the quotient of an infinitesimal amount of heat and the instantaneous temperature. He first called it transformation-content (Verwandlungsinhalt in German) and later coined the term entropy from a Greek word for transformation. Earlier, in 1862, Clausius had interpreted the concept as disgregation, referring to microscopic constitution and structure.

Entropy predicts that certain processes are irreversible or impossible, even when they would not violate the conservation of energy expressed in the first law of thermodynamics. Entropy is central to the second law of thermodynamics, which states that the entropy of an isolated system left to spontaneous evolution cannot decrease with time, because such systems tend toward a state of thermodynamic equilibrium, where the entropy is highest.

Entropy is described in two ways: from the macroscopic perspective of classical thermodynamics and from the microscopic perspective central to statistical mechanics. Entropy is traditionally defined in terms of macroscopically measurable physical properties such as bulk mass, volume, pressure, and temperature. Entropy is statistically defined in terms of the statistics of the motions of the microscopic constituents of a system – first classically, for example, Newtonian particles constituting a gas, and later quantum-mechanically (photons, phonons, spins, etc.). The two approaches combine to form a consistent, unified view of the same phenomenon expressed in the second law of thermodynamics, which has universal applicability to physical processes.
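As a minimal sketch of the statistical view, the Python snippet below evaluates Boltzmann's formula $S = k_B \ln W$, where $W$ is the number of equally probable microstates available to the system. The formula is the standard statistical-mechanics result rather than something derived on this page, and the function name `boltzmann_entropy` is a hypothetical helper chosen for illustration.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K (exact in the 2019 SI)


def boltzmann_entropy(num_microstates: int) -> float:
    """Return S = k_B ln W (in J/K) for W equally probable microstates."""
    if num_microstates < 1:
        raise ValueError("a system has at least one microstate")
    return K_B * math.log(num_microstates)


# A perfectly ordered system with a single microstate has zero entropy.
print(boltzmann_entropy(1))  # 0.0

# More accessible microstates means more disorder and a larger entropy:
# doubling W adds k_B ln 2, whatever W was to begin with.
print(boltzmann_entropy(2000) - boltzmann_entropy(1000))
print(K_B * math.log(2))
```

The last two lines print (to floating-point precision) the same value, which illustrates why entropy grows logarithmically rather than linearly with the number of microstates.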

Overview of Entropy

Entropy is an important concept that students must understand thoroughly when studying chemistry and physics. Entropy can be defined in several ways and is therefore applied in a variety of contexts, such as thermodynamics, cosmology, and even economics. The concept essentially describes the direction of spontaneous changes in everyday phenomena, that is, the universe’s tendency toward disorder.

Entropy is commonly defined as a measure of a system’s randomness or disorder. The German physicist Rudolf Clausius introduced the concept in 1865. Beyond this general definition, there are several more specific ones. On this page, we look at two of them: the thermodynamic definition and the statistical definition. The thermodynamic definition does not consider the microscopic details of a system. Instead, it describes the system’s behaviour in terms of macroscopic thermodynamic properties such as temperature, pressure, and heat capacity, and it applies to systems in equilibrium.

The statistical definition, developed later, expresses thermodynamic properties in terms of the statistics of a system’s molecular motions; in this picture, the entropy of a molecular system is a measure of its disorder. The following are some other popular interpretations of entropy:

In quantum statistical mechanics, John von Neumann used the density matrix to extend the concept of entropy to the quantum domain.

In information theory, entropy measures the average uncertainty, or information content, of a message source; it is also used to describe a system’s efficiency in transmitting a signal and the loss of information in a transmitted signal.

In the study of dynamical systems, entropy describes the increasing complexity of a system’s behaviour and quantifies the average flow of information per unit of time.

In sociology, entropy refers to the social decline or natural decay of structure (such as law, organization, and convention) in a social system.

In cosmology, entropy refers to the universe’s hypothetical tendency toward maximum homogeneity, a state in which all matter is at a uniform temperature.
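For the information-theory interpretation listed above, a short sketch may help. It assumes the standard Shannon entropy $H = -\sum_i p_i \log_2 p_i$, measured in bits, for a source whose symbols occur with probabilities $p_i$; the function name `shannon_entropy` is a hypothetical helper, not part of any library.

```python
import math
from typing import Sequence


def shannon_entropy(probabilities: Sequence[float]) -> float:
    """Return H = -sum(p * log2 p) in bits; outcomes with p = 0 contribute nothing."""
    if not math.isclose(sum(probabilities), 1.0):
        raise ValueError("probabilities must sum to 1")
    return sum(-p * math.log2(p) for p in probabilities if p > 0)


print(shannon_entropy([0.5, 0.5]))   # fair coin: 1.0 bit
print(shannon_entropy([1.0]))        # certain outcome: 0.0 bits of uncertainty
print(shannon_entropy([0.25] * 4))   # four equally likely symbols: 2.0 bits
```

As with thermodynamic entropy, the value is largest when all outcomes are equally likely (maximum "disorder") and zero when the outcome is certain.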

Introduction of entropy as a state function

A state function is a property of a system that depends only on the system’s current equilibrium thermodynamic state (for example, gas, liquid, solid, crystal, or emulsion), not on the path by which the system reached that state. State functions relate a system’s state variables to one another and together describe its equilibrium state, and thus also the type of system. Entropy is such a state function: its value is determined by the system’s condition rather than by the path taken. The entropy change between two states therefore depends only on the initial and final states, and it can be calculated by integrating the small entropy changes along any reversible path connecting them:

$$\Delta S = \int_{\mathrm{initial}}^{\mathrm{final}} \frac{dq_{\mathrm{rev}}}{T}$$

Some examples show how entropy tracks disorder. When reactants break down into a greater number of product molecules during a chemical process, entropy increases. A substance at a higher temperature has more molecular randomness, and therefore more entropy, than the same substance at a lower temperature. In both cases, entropy increases as order decreases.
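The path independence of entropy can be checked numerically. The sketch below assumes 1 mol of a monatomic ideal gas with constant molar heat capacity $C_{V,m} = \tfrac{3}{2}R$ and takes it from state A $(T_1, V_1)$ to state B $(T_2, V_2)$ along two different reversible paths. On each leg it integrates $dq_{\mathrm{rev}}/T$ with a simple midpoint rule (a stand-in for exact integration); the helper names and the chosen temperatures and volumes are illustrative assumptions.

```python
import math

R = 8.314462618  # molar gas constant, J/(mol*K)


def integrate(f, a, b, steps=20_000):
    """Approximate the integral of f from a to b with the midpoint rule."""
    h = (b - a) / steps
    return sum(f(a + (i + 0.5) * h) for i in range(steps)) * h


def isothermal_leg(n, T, V_start, V_end):
    """(q_rev, dS) for a reversible isothermal volume change of an ideal gas."""
    q = integrate(lambda V: n * R * T / V, V_start, V_end)        # dq_rev = P dV
    dS = integrate(lambda V: n * R * T / V / T, V_start, V_end)   # dS = dq_rev / T
    return q, dS


def isochoric_leg(n, Cv_m, T_start, T_end):
    """(q_rev, dS) for reversible heating at constant volume."""
    q = integrate(lambda T: n * Cv_m, T_start, T_end)             # dq_rev = n Cv dT
    dS = integrate(lambda T: n * Cv_m / T, T_start, T_end)        # dS = dq_rev / T
    return q, dS


n, Cv_m = 1.0, 1.5 * R   # assumed: 1 mol of monatomic ideal gas, Cv = 3R/2
T1, V1 = 300.0, 0.010    # state A: K, m^3
T2, V2 = 450.0, 0.025    # state B: K, m^3

# Path 1: expand at T1, then heat at constant V2.
legs1 = [isothermal_leg(n, T1, V1, V2), isochoric_leg(n, Cv_m, T1, T2)]
# Path 2: heat at constant V1, then expand at T2.
legs2 = [isochoric_leg(n, Cv_m, T1, T2), isothermal_leg(n, T2, V1, V2)]

q1 = sum(q for q, _ in legs1)
dS1 = sum(s for _, s in legs1)
q2 = sum(q for q, _ in legs2)
dS2 = sum(s for _, s in legs2)
dS_exact = n * Cv_m * math.log(T2 / T1) + n * R * math.log(V2 / V1)

print(f"path 1: q = {q1:.1f} J, dS = {dS1:.4f} J/K")
print(f"path 2: q = {q2:.1f} J, dS = {dS2:.4f} J/K")
print(f"closed form: dS = {dS_exact:.4f} J/K")
```

The heat absorbed differs between the two paths (heat is a path function), while the entropy change comes out the same on both paths, about 12.7 J/K for these assumed states, matching the closed-form ideal-gas result.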

Define entropy

Entropy is a measure of a system’s disorder. It is an extensive property of a thermodynamic system, which means that its value depends on the amount of matter present. Entropy is usually denoted by the letter S in equations and has units of joules per kelvin (J·K⁻¹), or kg·m²·s⁻²·K⁻¹ in SI base units. A highly ordered system has low entropy.

Entropy is the amount of thermal energy per unit temperature in a system that is not available for useful work. Because work is obtained from ordered molecular motion, entropy is also a measure of a system’s molecular disorder or randomness.

Measuring entropy

The entropy change of a process is defined as the amount of heat absorbed or released isothermally and reversibly, divided by the absolute temperature at which the transfer occurs.

The entropy formula is as follows:

$$\Delta S = \frac{q_{\mathrm{rev,iso}}}{T}$$

If the same amount of heat is added at a higher and at a lower temperature, the increase in randomness is greater at the lower temperature. In other words, the entropy change is inversely proportional to the absolute temperature at which the heat is transferred.
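A minimal Python sketch of $\Delta S = q_{\mathrm{rev,iso}}/T$ follows. The helper name `entropy_change` is hypothetical, and the enthalpy of fusion of ice (about 6.01 kJ/mol at 273.15 K) is used as an approximate, commonly cited value for the reversible heat of melting.

```python
def entropy_change(q_rev: float, temperature: float) -> float:
    """Return dS = q_rev / T (J/K) for heat q_rev (J) transferred reversibly at constant T (K)."""
    if temperature <= 0:
        raise ValueError("absolute temperature must be positive")
    return q_rev / temperature


# Melting 1 mol of ice at its normal melting point (heat of fusion ~6.01 kJ/mol).
print(entropy_change(6010.0, 273.15))  # roughly 22.0 J/K

# The same 1000 J of heat gives a larger entropy change at the lower temperature.
print(entropy_change(1000.0, 250.0), entropy_change(1000.0, 500.0))  # 4.0 2.0
```

Doubling the temperature halves the entropy change for the same heat, which is the inverse proportionality described above.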

The change in total entropy is

$$\Delta S_{\mathrm{total}} = \Delta S_{\mathrm{surroundings}} + \Delta S_{\mathrm{system}}$$

Total entropy change is the sum of the entropy changes of the system and its surroundings.

If the system loses an amount of heat $q$ at temperature $T_1$ and the surroundings receive it at temperature $T_2$, the total entropy change can be computed as follows:

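$$\Delta S_{\mathrm{system}} = -\frac{q}{T_1}, \qquad \Delta S_{\mathrm{surroundings}} = \frac{q}{T_2}$$

$$\Delta S_{\mathrm{total}} = -\frac{q}{T_1} + \frac{q}{T_2}$$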

If $\Delta S_{\mathrm{total}}$ is positive, the process is spontaneous.

If $\Delta S_{\mathrm{total}}$ is negative, the process is not spontaneous.

If $\Delta S_{\mathrm{total}}$ is zero, the system is at equilibrium.
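The three criteria above can be sketched in a few lines of Python, assuming the system and its surroundings together form an isolated system. The names `total_entropy_change` and `classify`, and the small tolerance used to treat a floating-point result as zero, are illustrative assumptions.

```python
def total_entropy_change(q: float, T_system: float, T_surroundings: float) -> float:
    """dS_total = -q/T1 + q/T2: the system loses heat q at T1, the surroundings gain it at T2."""
    return -q / T_system + q / T_surroundings


def classify(delta_s_total: float, tol: float = 1e-12) -> str:
    """Apply the sign criteria for dS_total (tol absorbs floating-point noise)."""
    if delta_s_total > tol:
        return "spontaneous"
    if delta_s_total < -tol:
        return "not spontaneous"
    return "equilibrium"


# 1000 J flows from a system at 400 K into surroundings at 300 K.
ds = total_entropy_change(1000.0, 400.0, 300.0)
print(round(ds, 3), classify(ds))  # 0.833 spontaneous
```

Reversing the temperatures (heat flowing from 300 K to 400 K) makes $\Delta S_{\mathrm{total}}$ negative, and equal temperatures give zero, matching the equilibrium case.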

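Consider the entropy change during the isothermal reversible expansion of an ideal gas from volume $V_1$ to $V_2$:

$$\Delta S = \frac{q_{\mathrm{rev,iso}}}{T}$$

The first law of thermodynamics states that

$$\Delta U = q + w$$

For the isothermal expansion of an ideal gas, $\Delta U = 0$, because the internal energy of an ideal gas depends only on its temperature. Therefore

$$q_{\mathrm{rev}} = -w_{\mathrm{rev}} = nRT \ln\frac{V_2}{V_1}$$

and

$$\Delta S = nR \ln\frac{V_2}{V_1}$$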

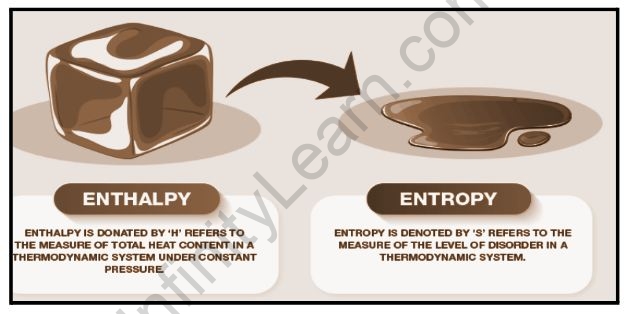
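The isothermal-expansion result $\Delta S = nR \ln(V_2/V_1)$ can be evaluated with a short Python sketch. The function name `isothermal_expansion` and the example values (1 mol at 298.15 K, volume doubled) are illustrative assumptions; only the volume ratio matters, so any consistent volume unit works.

```python
import math

R = 8.314462618  # molar gas constant, J/(mol*K)


def isothermal_expansion(n: float, T: float, V1: float, V2: float) -> tuple[float, float]:
    """Return (q_rev in J, dS in J/K) for reversible isothermal expansion of n mol of ideal gas."""
    q_rev = n * R * T * math.log(V2 / V1)  # q_rev = -w_rev = nRT ln(V2/V1), since dU = 0
    delta_s = q_rev / T                    # dS = q_rev / T = nR ln(V2/V1)
    return q_rev, delta_s


q, dS = isothermal_expansion(n=1.0, T=298.15, V1=10.0, V2=20.0)
print(f"q_rev = {q:.1f} J, dS = {dS:.3f} J/K")
```

Doubling the volume gives $\Delta S = R \ln 2 \approx 5.76$ J/K per mole, independent of the temperature; a compression ($V_2 < V_1$) gives a negative entropy change for the gas.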

Enthalpy and Entropy

In physics, thermodynamics is the study of the effects of heat, energy, and work on a system. The entropy of a system is a measure of its thermal energy per unit temperature that is unavailable for doing useful work, and it is related to the molecular disorder, or randomness, of the molecules within the system. In short, entropy characterizes the degree of disorder or uncertainty in a system. Enthalpy is another key concept in thermodynamics: it represents the total heat content of the system. As energy is added to the system, its enthalpy rises; when energy is released, the enthalpy decreases.

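Enthalpy, $H = E + PV$, measures the total heat content of a thermodynamic system. At constant pressure, the enthalpy change is

$$\Delta H = \Delta E + P\Delta V$$

where $E$ is the internal energy, $P$ is the pressure, and $V$ is the volume.

In a thermodynamic system, entropy is a measure of disorder. Its change is given by

$$\Delta S = \frac{Q_{\mathrm{rev}}}{T}$$

where $Q_{\mathrm{rev}}$ is the heat transferred reversibly and $T$ is the absolute temperature.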
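The enthalpy and entropy equations can be combined in one worked example: boiling 1 mol of water at 100 °C and constant pressure, where the reversible heat equals $\Delta H$. The sketch assumes the commonly cited approximate enthalpy of vaporization of about 40.7 kJ/mol, treats the vapour as an ideal gas, and neglects the liquid's volume, so $P\Delta V \approx RT$; these are approximations, not exact data.

```python
R = 8.314462618      # molar gas constant, J/(mol*K)

T_boil = 373.15      # K, normal boiling point of water
dH_vap = 40_700.0    # J/mol, approximate enthalpy of vaporization at 100 °C

# dS = Q_rev / T: at constant pressure the reversible heat of the phase change is dH.
dS_vap = dH_vap / T_boil

# dH = dE + P*dV. Assumption: ideal-gas vapour and negligible liquid volume,
# so P*dV is approximately nRT with n = 1 mol.
P_dV = R * T_boil
dE_vap = dH_vap - P_dV

print(f"dS ≈ {dS_vap:.1f} J/(mol·K), PdV ≈ {P_dV:.0f} J, dE ≈ {dE_vap:.0f} J")
```

Under these assumptions, roughly 109 J/(mol·K) of entropy is gained, and about 3.1 kJ of the 40.7 kJ absorbed goes into expansion work against the atmosphere rather than into internal energy.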

Entropy is a measure of how disordered, or dispersed, the energy of a collection of particles is. The concept stems from thermodynamics, which describes how heat is transferred in a system. The word is derived from the Greek tropē, meaning “a turning” or “transformation.” The German physicist Rudolf Clausius coined the term, and he used entropy to state a precise form of the second law of thermodynamics: any irreversible spontaneous change in an isolated system leads to an increase in entropy. For example, if a block of ice is placed on a hot stove and the ice and stove are treated together as a single isolated system, the ice melts and the total entropy rises. Because all spontaneous processes are irreversible, the entropy of the universe is increasing, and ever more energy becomes unavailable for work. For this reason, the universe is said to be “running down.”

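The SI unit of entropy is the joule per kelvin (J·K⁻¹). The entropy change of a system is written ΔS, and its equation is as follows:

$$\Delta S_{\mathrm{system}} = \frac{Q_{\mathrm{rev}}}{T}$$

where $\Delta S_{\mathrm{system}}$ is the change in entropy of the system, $Q_{\mathrm{rev}}$ is the heat transferred reversibly, and $T$ is the temperature on the Kelvin scale.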

Enthalpy and entropy are two important thermodynamic quantities. They are related in chemical reactions because every reaction involves the release or absorption of heat or energy, and whether several compounds react to form a new product depends on both of these factors.


FAQs

Why is the entropy of water constant at the triple point?

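The triple point is the unique temperature and pressure at which the solid, liquid, and gas phases of water coexist in equilibrium. Because the temperature and pressure are fixed there, the entropy per mole of each phase is also fixed: the gas phase has a higher entropy than the liquid, and the liquid a higher entropy than the solid.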

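Does freezing increase entropy?

Freezing decreases the entropy of the water itself, because liquid water has higher entropy than ice; the water’s own entropy therefore favors melting. However, freezing is an exothermic process: energy released by the water is dissipated into the surrounding environment, and this heat increases the entropy of the surroundings. Below 0 °C (at atmospheric pressure), the gain in the surroundings outweighs the loss in the water, so the total entropy increases and freezing is spontaneous.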
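The balance between the water's entropy loss and the surroundings' entropy gain can be estimated with a rough Python sketch. It assumes the heat of fusion (about 6.01 kJ/mol) and the water's entropy of fusion do not change with temperature, which is only an approximation away from 0 °C; the function name `freezing_entropy` is a hypothetical helper.

```python
DH_FUS = 6010.0   # J/mol, approximate enthalpy of fusion of ice
T_MELT = 273.15   # K, normal melting point of ice


def freezing_entropy(T_surroundings: float) -> tuple[float, float, float]:
    """Approximate (dS_system, dS_surroundings, dS_total) in J/(mol·K) for freezing 1 mol of water.

    Assumes the heat of fusion and the system's entropy of fusion are temperature-independent.
    """
    ds_system = -DH_FUS / T_MELT                # liquid -> ice: the water becomes more ordered
    ds_surroundings = DH_FUS / T_surroundings   # released heat is absorbed by the surroundings
    return ds_system, ds_surroundings, ds_system + ds_surroundings


for T in (263.15, 273.15, 283.15):  # -10 °C, 0 °C, +10 °C
    _, _, ds_total = freezing_entropy(T)
    print(f"{T - 273.15:+.0f} °C: dS_total ≈ {ds_total:+.2f} J/(mol·K)")
```

Under these assumptions, the total entropy change is positive at -10 °C (freezing is spontaneous), zero at 0 °C (ice and water in equilibrium), and negative at +10 °C (ice melts instead).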

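Can entropy be negative?

The absolute entropy of a substance cannot be negative, but an entropy change can be. If entropy represents the amount of disorder, then a negative entropy change means that something has become less disordered, or more ordered. For example, when you fold a crumpled shirt, the shirt becomes less disordered and its entropy change is negative. However, the effort you expend makes you (and your surroundings) more disordered, so the entropy change of the system as a whole is zero or positive.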